Safe Reinforcement Learning Considering Reward and Punishment

DOI: 10.1109/DEVLRN.2019.8850699

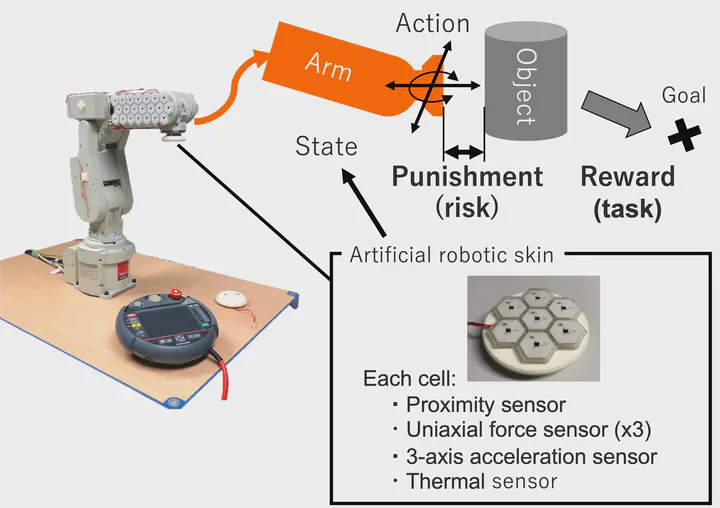

This paper presents a new actor-critic (AC) algorithm, named “RP-AC”, based on a biological reward-punishment framework for reinforcement learning (RL). RL can give robots the capability to take over complicated and dangerous tasks from humans. Such tasks, however, may require accounting for safety, because dangerous states and actions could cause the robot to break down and cause injuries. In the reward-punishment framework, robots receive both a positive reward (called reward), which represents the degree of task achievement, and a negative reward (called punishment), which represents the risk of the current state and action; RL algorithms then compose the two. In this paper, to control robots more directly, we employ the AC algorithm, which operates in a continuous action space. To this end, we propose policy gradients that specify how to compose reward and punishment or, more precisely, their value functions. Instead of merely composing them at a fixed ratio, we theoretically introduce the immediate reward and punishment into the policy gradients, as animals do in decision making. In experiments on a pushing task, the vanilla AC fails to acquire the task because it places too much emphasis on safety, whereas the proposed RP-AC successfully acquires the task with the same level of safety.
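For orientation, the fixed-ratio composition that the abstract contrasts against can be sketched as follows. This is a minimal illustration, not the RP-AC update itself, which the abstract does not specify. It assumes two separate critics (one for reward, one for punishment), a one-step TD error for each, and a hypothetical weight `ratio` on the punishment side; the function names, the `ratio` parameter, and the discount `gamma` are all assumptions introduced here.

```python
def td_error(signal, value_now, value_next, gamma=0.99):
    """One-step TD error for a single signal (reward or punishment)."""
    return signal + gamma * value_next - value_now


def composed_td_error(reward, punishment,
                      v_r, v_r_next, v_p, v_p_next,
                      ratio=0.5, gamma=0.99):
    """Mix reward and punishment TD errors at a fixed ratio.

    Assumptions (not from the paper): `punishment` is non-positive,
    and `ratio` in [0, 1] is a hypothetical weight on the punishment side.
    """
    delta_r = td_error(reward, v_r, v_r_next, gamma)
    delta_p = td_error(punishment, v_p, v_p_next, gamma)
    return (1.0 - ratio) * delta_r + ratio * delta_p


# With all value estimates at zero, the composite reduces to a weighted
# sum of the immediate reward and punishment.
mixed = composed_td_error(1.0, -2.0, 0.0, 0.0, 0.0, 0.0, ratio=0.25)
```

In an actor-critic update, a composite of this kind would typically scale the gradient of the log-policy. With a large `ratio`, the composite can turn negative even for actions that make progress on the task, which is one plausible reading of how a fixed-ratio scheme can overemphasize safety.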